Global governance shift in perception of AI, but more debate is crucial

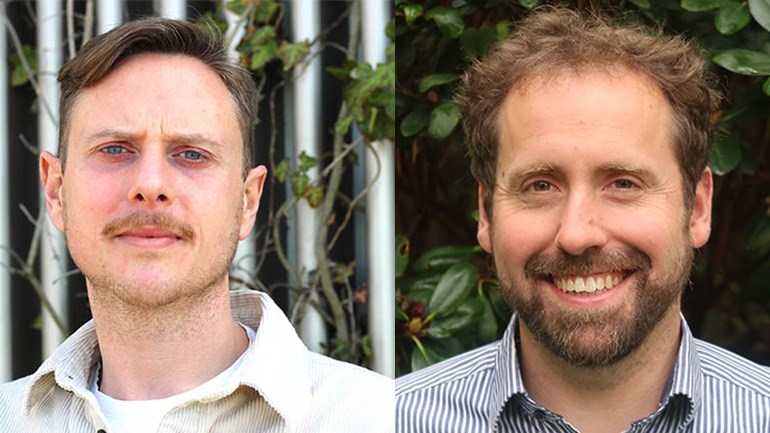

Jason Tucker (left) and Michael Strange (right) have co-authored an article which examines a shift in three global organisations’ perception of the benefits of AI.

For three global organisations, the discourse around AI has shifted from concerns about privacy and the mass collection of data to more positive debates about how it can be used as a tool and a facilitator of change, according to Malmö University academics in a recently published article.

Michael Strange, an associate professor in international relations, tracked the UN Human Rights Office, the WHO, and UNESCO from 2014-21 and observed that while the changes are positive, there still needs to be more debate about AI, in particular its use in relation to delivering healthcare.

“What has shifted is the range of voices, and the way AI is talked about has broadened; more critical voices have been drowned out by louder voices about AI being a tool and how it can be facilitated,” says Strange, who co-authored the article, Global governance and the normalization of artificial intelligence as ‘good’ for human health.

Strange found the pandemic was particularly influential: “With the pandemic there was a massive acceleration of data intensive technology in healthcare. Today we are using this label artificial intelligence, but AI is by no means one technology. If you look at it as a political or social scientist, you could ask ‘Is AI really a technology, or is it just another way of organising society?’.”

AI is no longer discussed only as a technology; questions also arise as to who should be the key providers of different services. In the case of healthcare, many assume that the private sector should lead.

“The talk of crisis has moved away from the pandemic to now talking about a broader crisis in healthcare, with issues around labour shortages and increased demand.

“What we see is a shift in the perception of who should provide the tools and infrastructure around healthcare. AI might just be a tool that you can physically pick up, but when it is data and it learns from that data, it is not just a single tool, it is a dynamic infrastructure and, in that regard, it is very difficult to change between tools over time.”

Strange and Tucker highlight two key assumptions: firstly, that AI is the future of healthcare, and secondly, that the private sector should be the provider of this. But there is also an issue of accountability:

“Perversely, there is this idea that the public sector is important as the responsible actor, so when things go wrong, it steps in. The question is though, to what extent can it step in if it has not developed the technology and doesn’t own the technology or the data?”

“This is a fundamental change, but we are treating it as if it is just a technicality, like buying a new iPhone. To be able to run a functioning healthcare system, it is very important that we can have a mature, transparent, democratically accountable conversation.”

While this shift in perspective can be regarded as positive, Strange notes that it reinforces the idea that AI is a single technology, and that AI is inherently good for healthcare. There is also a silence around this shift relating to the state versus the free market. “If we are silent about this discussion then we are saying it is not an important question,” comments Strange, adding:

“What we need in this area is more open debate: is it inherent that the market should provide AI? We need to ask why the state is stepping back. There is a huge void in the debate, considering the seismic shift AI can bring.”